Part 3 of our series on AI and Activism looks at the seven principles we developed in collaboration with Friends of the Earth for climate justice and digital rights campaigners.

Read or download the full report here. It further unpacks each principle and includes responsible practices and policy recommendations. This work was funded by Mozilla.

- Understanding Predominant Narratives in AI

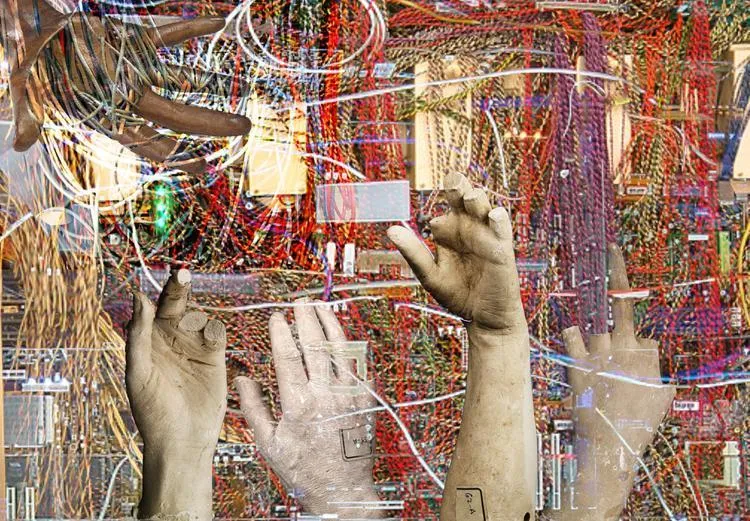

- Systemic complexities with AI

- Starting principles for the ethical use of AI

- How to be an Activist in a World of AI

Our hope is that you will use these principles to help navigate the dilemmas of AI use and communicate your approach and choices effectively — both with your networks and the wider public.

7 Principles

1. Exploring AI with curiosity creates opportunities for better choices.

The first principle encourages us to understand that there are types of AI that can help us find new and innovative solutions to problems we already face. Viewing this conversation from a perspective of learning and curiosity, and with a sense of playfulness, can help activists find happiness and hope in an ever more complex technological and social landscape.

AI is an increasingly divisive, strange and intriguing set of technologies. Encourage critical thinking about AI use in your communities, discussing and questioning its use and, where appropriate, exploring simpler, more sustainable alternatives.

2. Transparency around usage, data and algorithms builds trust.

Transparency is essential to address the environmental impacts of AI. It is also a requirement for public trust and to ensure accountability. Transparency helps us make informed decisions. Therefore, greater transparency in how AI is developed, trained and used, as well as in how we, as environmentalists and campaigners, are using AI, can have a profound impact on how technology influences our climate and our societies.

Encourage openness in AI development and usage, providing clear information about training data, environmental impacts, and possible unintended side effects.

3. Holding tech companies and governments accountable leads to responsible action.

Governments need to apply safety and transparency regulations, with strict consequences for non-compliance. Environmental justice campaigners could promote holistic assessment and accountability frameworks to help examine wider societal and environmental impacts (Kazansky et al., 2022). These would extend beyond carbon emissions to include resource extraction and social ramifications.

Holding one another to account and taking responsibility for our actions are essential to societal progress. We must hold both organisations and companies responsible for the environmental effects of AI, challenging misleading claims and ensuring responsible industry practices.

4. Including diverse voices strengthens decision-making around AI.

LLM training data is usually based on the somewhat indiscriminate ingestion of massive amounts of data, using datasets that represent the good, the bad, and the ugly of human discourse and bias. As LLMs tend to be a black box, we often cannot see where bias is coming from, and a lack of diverse perspectives in AI development means that the needs of marginalised communities are not met, further entrenching existing disparities.

Being inclusive is an intentional act of incorporating a range of perspectives to help ensure that marginalised voices are heard. To help build fair and equitable societies, we must work to prevent bias and existing inequalities from being further entrenched in AI systems.

5. Ensuring AI systems are sustainable reduces environmental impact and protects natural ecosystems.

When we talk about ‘sustainability’ in relation to AI, the most obvious targets are energy consumption, water use and emissions from data centres. However, we also need to talk about wider sustainability issues, including natural ecosystems, human well-being and the sustainability of outsourcing decision-making to machines.

Overreliance on technology in general can lead to the deterioration of skills, and AI is no different. Being sustainable goes beyond reducing energy usage. It includes careful consideration of the use of valuable resources, including human labour. Promote energy-efficient AI models and adopt an approach that reduces environmental and societal harms at every stage of AI’s lifecycle.

6. Community is key to planetary resilience.

It is important to establish channels for genuine community participation in AI-related discussions and decision-making. This involves ensuring access to relevant information and the opportunity for communities to provide free, prior, and informed consent. Developing shared principles and guidelines that prioritise transparency, equity, and inclusivity will empower communities to actively contribute to shaping AI solutions that align with their values and priorities.

Technology is a tool communities can use to bring about a better world, but it is solidarity that gets us there. Encourage AI development to take place according to the needs of minority and majority communities and our planet, not just market forces.

7. Advocating with an intersectional approach supports humane AI.

There is significant overlap between the communities that drive forward progressive environmental justice policies and those who advocate for digital rights (Kazansky et al., 2022). In recent years, many tech activists have used their technical skills on behalf of justice-based organisations. These organisations have sought out tech activists to help them understand and mitigate digital rights issues like data security, misinformation, or digital attacks.

While we see the centralisation of power in the tech industry and technology stacks owned by just a few mega-corporations, we also see intersectionality becoming a critical component in climate justice and digital rights campaigning.

Read or download the full report here.

Technology has changed so much about our world. Now, AI is changing things again and at hyperspeed. We work to understand its potential implications for our communities, learners and programmes. Do you need help with AI Literacies, strategy, or storytelling? Get in touch!

References

Kazansky, B., et al. (2022). ‘At the Confluence of Digital Rights and Climate & Environmental justice: A landscape review’. Engine Room. Available at: https://engn.it/climatejusticedigitalrights (Accessed: 24 October 2024)